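Kubernetes is a distributed cluster system built by Google on top of Docker. It consists of the following components:

etcd: a highly available key-value store for shared configuration and service discovery. Here it works together with flannel on the minion machines. It gives the Docker daemon on each minion a different IP range, so that every running container on any minion has an IP address that differs from every other container, including containers on other minions.

flannel: provides the container network between hosts.

kube-apiserver: every operation, whether issued with kubectl or directly through the remote API, goes through the apiserver.

kube-controller-manager: runs the control loops for the replication controller, endpoints controller, namespace controller, and serviceaccounts controller, and talks to kube-apiserver to keep these controllers working.

kube-scheduler: the Kubernetes scheduler assigns pods to specific worker nodes (minions) according to a scheduling algorithm; this step is also called binding (bind).

kubelet: the kubelet runs on each Kubernetes minion node. It is the logical successor of the container agent.

kube-proxy: a Kubernetes component that runs on the minion nodes and acts as a service proxy.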

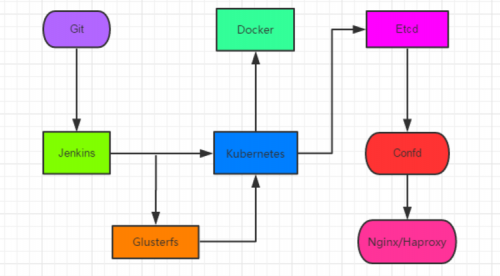

The figure above shows the GIT+Jenkins+Kubernetes+Docker+Etcd+confd+Nginx+Glusterfs architecture. The setup is described below.

Environment:

Two machines running CentOS 7:

[root@node6717 pods]# yum update

Versions after the upgrade:

[root@node6717 pods]# cat /etc/redhat-release

CentOS Linux release 7.6.1810 (Core)

[root@node6717 pods]# uname -a

Linux node6717 3.10.0-514.el7.x86_64 #1 SMP Tue Nov 22 16:42:41 UTC 2016 x86_64 x86_64 x86_64 GNU/Linux

192.168.67.17: Kubernetes master

192.168.99.160: Kubernetes minion (minion1)

Install Docker:

yum install docker-io

Edit the daemon options and turn off signature verification:

vim /etc/sysconfig/docker

OPTIONS='--log-driver=journald --signature-verification=false'

[root@k8s-master java-mvn]# cat /etc/docker/daemon.json

{"insecure-registries":["192.168.66.142:5000"]}

I. Disable the firewall and SELinux

1. If the firewall is enabled, stop and disable it as follows (on all machines):

systemctl stop firewalld && systemctl disable firewalld && systemctl mask firewalld

2. Disable SELinux:

# setenforce 0

[root@master ~]# sed -i 's/^SELINUX=enforcing/SELINUX=disabled/g' /etc/selinux/config
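On CentOS 7, /etc/sysconfig/selinux is normally a symlink to /etc/selinux/config, and GNU sed -i without --follow-symlinks replaces a symlink with a regular file instead of editing its target, so the change never reaches the real config. A minimal sketch of a helper that edits the link target and prints the resulting setting (the function name disable_selinux_config is hypothetical):

```shell
#!/usr/bin/env bash
# Hypothetical helper: set SELINUX=disabled in an SELinux config file.
# --follow-symlinks makes GNU sed edit the file the link points to,
# instead of replacing the symlink itself with a regular file.
disable_selinux_config() {
  local file=${1:-/etc/selinux/config}
  sed -i --follow-symlinks 's/^SELINUX=enforcing/SELINUX=disabled/' "$file" || return 1
  # Print the effective SELINUX= line so the change can be checked.
  grep '^SELINUX=' "$file"
}
```

For example, disable_selinux_config /etc/sysconfig/selinux should print SELINUX=disabled. Note that setenforce 0 only switches the running system to permissive mode; the config file change takes effect at the next boot.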

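II. Master installation and configuration

1. Install and configure the Kubernetes master (via yum)

# yum -y install etcd kubernetes flannel docker-io lrzsz vim lsof tree wget git

systemctl enable etcd kube-apiserver kube-controller-manager kube-scheduler flanneld docker

Configure etcd

Make sure every entry listed below is set correctly and is not commented out; the same applies to all of the configuration files that follow.

# vim /etc/etcd/etcd.conf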

ETCD_NAME=default

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

ETCD_LISTEN_CLIENT_URLS="http://0.0.0.0:2379"

ETCD_ADVERTISE_CLIENT_URLS="http://192.168.67.17:2379"
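Because a commented-out line is easy to miss, a quick check before starting etcd can help. A minimal sketch, assuming the env-style KEY=value format shown above; check_config_keys is a hypothetical name:

```shell
#!/usr/bin/env bash
# Hypothetical helper: report which of the given keys are missing or only
# present as comments in an env-style config file such as
# /etc/etcd/etcd.conf. Returns 0 only if every key is set.
check_config_keys() {
  local file=$1; shift
  local key missing=0
  for key in "$@"; do
    # Match only uncommented lines that start with KEY=
    if ! grep -q "^[[:space:]]*${key}=" "$file"; then
      echo "missing or commented out: $key"
      missing=1
    fi
  done
  return "$missing"
}
```

check_config_keys /etc/etcd/etcd.conf ETCD_NAME ETCD_DATA_DIR ETCD_LISTEN_CLIENT_URLS ETCD_ADVERTISE_CLIENT_URLS prints nothing and returns 0 when all four are set. The same check can be pointed at the /etc/kubernetes files that follow.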

systemctl enable etcd

systemctl start etcd

Use ss -antl (or lsof) to check that etcd is listening on port 2379:

[root@k8s-master ~]# lsof -i:2379

COMMAND   PID USER   FD   TYPE DEVICE SIZE/OFF NODE NAME
etcd    22060 etcd    6u  IPv6 640833      0t0  TCP *:2379 (LISTEN)
etcd    22060 etcd   10u  IPv4 640839      0t0  TCP localhost:49662->localhost:2379 (ESTABLISHED)
etcd    22060 etcd   12u  IPv6 640840      0t0  TCP localhost:2379->localhost:49662 (ESTABLISHED)

Configure Kubernetes

vim /etc/kubernetes/apiserver

KUBE_API_ADDRESS="--address=0.0.0.0"

KUBE_API_PORT="--port=8080"

KUBELET_PORT="--kubelet_port=10250"

KUBE_ETCD_SERVERS="--etcd_servers=http://192.168.67.17:2379"

KUBE_SERVICE_ADDRESSES="--service-cluster-ip-range=10.254.0.0/16 --service-node-port-range=1-65535"

KUBE_ADMISSION_CONTROL="--admission-control=NamespaceLifecycle,NamespaceExists,LimitRanger,ResourceQuota"

# Remove SecurityContextDeny and ServiceAccount from the admission-control list

KUBE_API_ARGS=""
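The comment above asks for SecurityContextDeny and ServiceAccount to be dropped from the admission-control list. If you prefer to derive the new value from the packaged one rather than retype it, here is a small sketch; remove_admission_plugins is a hypothetical name, and it only transforms the string without touching any file:

```shell
#!/usr/bin/env bash
# Hypothetical helper: drop the named plugins from a comma-separated
# --admission-control list, keeping the remaining plugins in order.
remove_admission_plugins() {
  local list=$1; shift
  local out="" plugin drop skip
  local IFS=,            # split the list on commas
  for plugin in $list; do
    skip=0
    for drop in "$@"; do
      if [ "$plugin" = "$drop" ]; then skip=1; fi
    done
    # Append with a comma separator unless this plugin is dropped.
    [ "$skip" -eq 1 ] || out="${out:+$out,}$plugin"
  done
  printf '%s\n' "$out"
}
```

Assuming the packaged default list looks like NamespaceLifecycle,NamespaceExists,LimitRanger,SecurityContextDeny,ServiceAccount,ResourceQuota, passing it with the arguments SecurityContextDeny ServiceAccount yields NamespaceLifecycle,NamespaceExists,LimitRanger,ResourceQuota, which is the value used above.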

vim /etc/kubernetes/controller-manager

###

# The following values are used to configure the kubernetes controller-manager

# defaults from config and apiserver should be adequate

# Add your own!

KUBE_CONTROLLER_MANAGER_ARGS="--node-monitor-grace-period=10s --pod-eviction-timeout=10s"

vim /etc/kubernetes/config

KUBE_LOGTOSTDERR="--logtostderr=true"

KUBE_LOG_LEVEL="--v=0"

KUBE_ALLOW_PRIV="--allow-privileged=false"

KUBE_MASTER="--master=http://192.168.67.17:8080"

===============================================

2. Configure the flannel network in etcd

[root@node6717 kubernetes]# etcdctl -C "http://192.168.67.17:2379" set /atomic.io/network/config '{"Network":"10.1.0.0/16"}'

{"Network":"10.1.0.0/16"}

3. Start the etcd, kube-apiserver, kube-controller-manager, kube-scheduler, docker and flanneld services

mkdir -p /server/shell_scripts/k8s

vim master_daemon_start.sh

#!/bin/bash

for SERVICES in etcd kube-apiserver kube-controller-manager kube-scheduler flanneld docker

do

systemctl restart $SERVICES

systemctl status $SERVICES

done

chmod 755 master_daemon_start.sh

Or:

[root@Control k8s]# cat master_start.sh

systemctl enable kube-apiserver kube-scheduler kube-controller-manager etcd flanneld docker
systemctl start kube-apiserver kube-scheduler kube-controller-manager etcd flanneld docker

Then run ss -antl to check that the apiserver is listening on port 8080.
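The start scripts above keep going even when a service fails, so an early error (for example in etcd, which kube-apiserver depends on) can scroll past unnoticed. A hedged variant that stops at the first service that does not come up; restart_services and the SYSTEMCTL override are hypothetical additions, not part of the original scripts:

```shell
#!/usr/bin/env bash
# Hypothetical variant of master_daemon_start.sh: restart services in the
# given order and stop at the first one that is not active afterwards.
# SYSTEMCTL defaults to systemctl and can be overridden (e.g. for testing).
restart_services() {
  local ctl=${SYSTEMCTL:-systemctl} svc
  for svc in "$@"; do
    if ! "$ctl" restart "$svc" || ! "$ctl" is-active --quiet "$svc"; then
      echo "$svc failed to start; check: journalctl -u $svc" >&2
      return 1
    fi
    echo "$svc: active"
  done
}
```

On the master this would be called as restart_services etcd kube-apiserver kube-controller-manager kube-scheduler flanneld docker, keeping etcd first since the apiserver stores its state there. Setting SYSTEMCTL=echo turns it into a rough dry run that prints the systemctl arguments instead of executing them.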

4. The master is now configured. Running kubectl get nodes shows how many minions are running and their status. No minion has been installed or configured yet, so the result is empty:

# kubectl get nodes

NAME LABELS STATUS

III. Minion installation and configuration (repeat on every minion)

1. Install and configure the packages

# yum -y install flannel kubernetes docker-io

systemctl enable flanneld docker kube-proxy kubelet

Point Kubernetes at the master:

# vim /etc/kubernetes/config

KUBE_LOGTOSTDERR="--logtostderr=true"

KUBE_LOG_LEVEL="--v=0"

KUBE_ALLOW_PRIV="--allow-privileged=false"

KUBE_MASTER="--master=http://192.168.67.17:8080"

KUBE_ETCD_SERVERS="--etcd_servers=http://192.168.67.17:2379"

Configure the kubelet (replace $LOCALIP below with each minion's own IP address, e.g. 192.168.99.160):

# vim /etc/kubernetes/kubelet

KUBELET_ADDRESS="--address=0.0.0.0"

KUBELET_PORT="--port=10250"

# change the hostname to this host's IP address

KUBELET_HOSTNAME="--hostname_override=$LOCALIP"

KUBELET_API_SERVER="--api_servers=http://192.168.67.17:8080"

KUBELET_POD_INFRA_CONTAINER="--pod-infra-container-image=registry.access.redhat.com/rhel7/pod-infrastructure:latest"

KUBELET_ARGS=""
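Editing $LOCALIP by hand on every minion is error-prone. A minimal sketch that writes the minion's IP into the KUBELET_HOSTNAME line; set_kubelet_hostname is a hypothetical name, and it overwrites whatever value that line currently holds:

```shell
#!/usr/bin/env bash
# Hypothetical helper: set --hostname_override in the KUBELET_HOSTNAME
# line of a kubelet config file to the given IPv4 address.
set_kubelet_hostname() {
  local file=$1 ip=$2
  # Reject anything that does not look like a dotted IPv4 address.
  [[ $ip =~ ^([0-9]{1,3}\.){3}[0-9]{1,3}$ ]] || { echo "bad IP: $ip" >&2; return 1; }
  sed -i "s|^KUBELET_HOSTNAME=.*|KUBELET_HOSTNAME=\"--hostname_override=${ip}\"|" "$file"
}
```

On minion1 this would be set_kubelet_hostname /etc/kubernetes/kubelet 192.168.99.160.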

2. Prepare to start the services (only needed if Docker has already run on this machine; otherwise skip this step)

Run ifconfig to check the machine's network configuration (look for docker0):

# ifconfig docker0

Link encap:Ethernet  HWaddr 02:42:B2:75:2E:67
inet addr:172.17.0.1  Bcast:0.0.0.0  Mask:255.255.0.0
UP BROADCAST MULTICAST  MTU:1500  Metric:1
RX packets:0 errors:0 dropped:0 overruns:0 frame:0
TX packets:0 errors:0 dropped:0 overruns:0 carrier:0
collisions:0 txqueuelen:0
RX bytes:0 (0.0 B)  TX bytes:0 (0.0 B)

Warning: on a machine that has already run Docker, docker0 already exists. Delete it before starting the services so that Docker can recreate it with the subnet assigned by flannel: sudo ip link delete docker0

3. Configure the flannel network

# vim /etc/sysconfig/flanneld

FLANNEL_ETCD_ENDPOINTS="http://192.168.67.17:2379"

Note: the /atomic.io/network prefix below must match the key written to etcd earlier (/atomic.io/network/config).

FLANNEL_ETCD_PREFIX="/atomic.io/network"

Start flanneld:

systemctl enable flanneld

systemctl restart flanneld

Start Docker:

systemctl enable docker

systemctl start docker
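After flanneld starts it records the subnet it leased for this host; by default flannel writes it to /run/flannel/subnet.env as shell-style FLANNEL_* variables. A sketch for printing that lease so it can be compared with docker0's address once Docker is up (show_flannel_lease is a hypothetical name; check the path on your system):

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the subnet and MTU that flanneld leased for
# this host, read from its env file (flannel's default path is assumed).
show_flannel_lease() {
  local file=${1:-/run/flannel/subnet.env}
  [ -r "$file" ] || { echo "no lease file: $file (is flanneld running?)" >&2; return 1; }
  # Source in a subshell so the FLANNEL_* variables do not leak out.
  ( . "$file" && echo "subnet=${FLANNEL_SUBNET} mtu=${FLANNEL_MTU}" )
}
```

The subnet it prints should contain the docker0 address that appears in the verification step of section IV.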

4. Start the services

mkdir -p /server/shell_scripts/k8s

vim slave_daemon_start.sh

#!/bin/bash

for SERVICES in flanneld kube-proxy kubelet docker

do

systemctl restart $SERVICES

systemctl status $SERVICES

done

chmod 755 slave_daemon_start.sh

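IV. Verify the installation

Make sure that the minion (192.168.99.160) and the master (192.168.67.17) are both installed and configured and that all of their services are running.

On the master, run kubectl get nodes: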

# kubectl get nodes

NAME STATUS AGE

192.168.99.160 Ready 1m

The configured minion now appears in the master's node list. To add more nodes, configure more machines the same way as the minion.
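To script this check, for example while waiting for a new minion to register, a small filter over the kubectl get nodes output can list the Ready nodes. A hedged sketch; ready_nodes is a hypothetical name, and it assumes STATUS is the second column as in the output above (the older NAME LABELS STATUS layout shown in section II puts it third):

```shell
#!/usr/bin/env bash
# Hypothetical helper: read "kubectl get nodes" output on stdin and print
# the names of nodes whose STATUS column is exactly "Ready".
ready_nodes() {
  # Skip the header row; STATUS is assumed to be the second column.
  awk 'NR > 1 && $2 == "Ready" { print $1 }'
}
```

For the cluster above, kubectl get nodes | ready_nodes should print 192.168.99.160.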

On each node you should now see two new network interfaces, docker0 and flannel0, and each node should get a different IP range on flannel0, for example:

docker0: flags=4099<UP,BROADCAST,MULTICAST>  mtu 1500
        inet 10.1.33.1  netmask 255.255.255.0  broadcast 0.0.0.0
        ether 02:42:d1:50:5b:f2  txqueuelen 0  (Ethernet)
        RX packets 0  bytes 0 (0.0 B)
        RX errors 0  dropped 0  overruns 0  frame 0
        TX packets 0  bytes 0 (0.0 B)
        TX errors 0  dropped 0  overruns 0  carrier 0  collisions 0

enp2s0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1500
        inet 192.168.99.160  netmask 255.255.128.0  broadcast 192.168.127.255
        inet6 fe80::3915:263a:cea4:fe8e  prefixlen 64  scopeid 0x20<link>
        ether 4c:cc:6a:89:34:32  txqueuelen 1000  (Ethernet)
        RX packets 15683491  bytes 2396283424 (2.2 GiB)
        RX errors 0  dropped 1  overruns 0  frame 0
        TX packets 588362  bytes 61413948 (58.5 MiB)
        TX errors 0  dropped 0  overruns 0  carrier 0  collisions 0

flannel0: flags=4305<UP,POINTOPOINT,RUNNING,NOARP,MULTICAST>  mtu 1472
        inet 10.1.33.0  netmask 255.255.0.0  destination 10.1.33.0
        unspec 00-00-00-00-00-00-00-00-00-00-00-00-00-00-00-00  txqueuelen 500  (UNSPEC)
        RX packets 0  bytes 0 (0.0 B)
        RX errors 0  dropped 0  overruns 0  frame 0
        TX packets 0  bytes 0 (0.0 B)
        TX errors 0  dropped 0  overruns 0  carrier 0  collisions 0
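A quick consistency check on the output above: docker0's address should fall inside the flannel network 10.1.0.0/16 written to etcd, whereas a leftover 172.17.0.1 means the old docker0 bridge is still in use. A pure-bash sketch (ip_to_int and ip_in_cidr are hypothetical names):

```shell
#!/usr/bin/env bash
# Hypothetical helpers: test whether an IPv4 address lies inside a CIDR
# block, e.g. docker0's address against the flannel network in etcd.
ip_to_int() {
  local IFS=. a b c d
  read -r a b c d <<< "$1"
  # 10# forces base 10 so octets like "08" are not read as octal.
  echo $(( (10#$a << 24) | (10#$b << 16) | (10#$c << 8) | 10#$d ))
}

ip_in_cidr() {
  local ip=$1 net=${2%/*} len=${2#*/}
  # Build a 32-bit netmask from the prefix length.
  local mask=$(( len == 0 ? 0 : (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
  # Succeeds (status 0) when both addresses share the same network part.
  (( ($(ip_to_int "$ip") & mask) == ($(ip_to_int "$net") & mask) ))
}
```

With the values from this setup, ip_in_cidr 10.1.33.1 10.1.0.0/16 succeeds and ip_in_cidr 172.17.0.1 10.1.0.0/16 fails.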

The Kubernetes cluster is now configured and running, and we can continue with the next steps.

Fixing external access to a Service's NodePort (needed on every master and minion):

FAQ:

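Problem: after a Service's NodePort is set, the port cannot be reached from outside

With a Service in NodePort mode, the port was still unreachable from outside. The node was already listening on port 30002, and the external security group and similar restrictions already allowed that port. None of the tutorials found online mentioned any iptables configuration.

After going through a lot of material, it turned out that Docker 1.13 changed its iptables rules: the default policy of the FORWARD chain is now DROP. Once forwarded traffic is dropped, different hosts can no longer communicate properly (when the Kubernetes network uses flannel).

Check the iptables rules on the node: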

[root@k8s-master ~]# iptables -L -n

Chain INPUT (policy ACCEPT)
target prot opt source destination
KUBE-FIREWALL all -- 0.0.0.0/0 0.0.0.0/0

Chain FORWARD (policy DROP)
target prot opt source destination
DOCKER-ISOLATION all -- 0.0.0.0/0 0.0.0.0/0
DOCKER all -- 0.0.0.0/0 0.0.0.0/0
ACCEPT all -- 0.0.0.0/0 0.0.0.0/0 ctstate RELATED,ESTABLISHED
ACCEPT all -- 0.0.0.0/0 0.0.0.0/0
ACCEPT all -- 0.0.0.0/0 0.0.0.0/0

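Once Kubernetes takes over iptables there are quite a few rules, but a closer look at the FORWARD chain shows its policy is DROP. That is why connecting to a node's IP on the NodePort cannot reach the container. Once the problem is known to be in iptables, it is easy to fix.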
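Since the problem comes down to a single policy value, it can be checked on each node without reading the whole listing. A sketch that extracts the FORWARD policy from iptables -L -n output (forward_policy is a hypothetical name):

```shell
#!/usr/bin/env bash
# Hypothetical helper: read "iptables -L -n" output on stdin and print the
# default policy of the FORWARD chain (e.g. DROP or ACCEPT).
forward_policy() {
  # The chain header looks like: Chain FORWARD (policy DROP)
  awk '$1 == "Chain" && $2 == "FORWARD" { sub(/[)]/, "", $4); print $4; exit }'
}
```

iptables -L -n | forward_policy prints DROP on an affected node and ACCEPT after the temporary fix that follows.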

Temporary fix: set the default policy of the FORWARD chain to ACCEPT:

[root@k8s-master ~]# iptables -P FORWARD ACCEPT

[root@k8s-master ~]# iptables -L -n

Chain INPUT (policy ACCEPT)
target prot opt source destination
KUBE-FIREWALL all -- 0.0.0.0/0 0.0.0.0/0

Chain FORWARD (policy ACCEPT)

This has to be run on every node, and the setting is lost when the node reboots.

Permanent fix: edit Docker's systemd unit and add the following line at the end of the [Service] section:

[root@k8s-master ~]# vim /usr/lib/systemd/system/docker.service

[Service]

............

ExecStartPost=/sbin/iptables -I FORWARD -s 0.0.0.0/0 -j ACCEPT

[root@k8s-master ~]# systemctl daemon-reload

[root@k8s-master ~]# systemctl restart docker

Because the systemd unit file has changed, systemd must reload it. After Docker restarts, iptables contains the rule ACCEPT all -- 0.0.0.0/0 0.0.0.0/0:

[root@k8s-master ~]# iptables -L -n

Chain INPUT (policy DROP)
target prot opt source destination
KUBE-FIREWALL all -- 0.0.0.0/0 0.0.0.0/0

Chain FORWARD (policy ACCEPT)
target prot opt source destination
ACCEPT all -- 0.0.0.0/0 0.0.0.0/0
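Files under /usr/lib/systemd/system belong to the docker package and can be overwritten on upgrade. A hedged alternative is a systemd drop-in under /etc/systemd/system/docker.service.d/, whose [Service] settings are merged with the packaged unit; ExecStartPost= lines in a drop-in are added to the existing ones. The function and file name below are hypothetical, and the directory is a parameter so the helper can be tried out safely elsewhere:

```shell
#!/usr/bin/env bash
# Hypothetical helper: write a systemd drop-in that inserts the FORWARD
# ACCEPT rule every time docker starts. Run "systemctl daemon-reload" and
# "systemctl restart docker" afterwards for it to take effect.
write_docker_forward_dropin() {
  local dir=${1:-/etc/systemd/system/docker.service.d}
  mkdir -p "$dir" || return 1
  cat > "$dir/10-forward-accept.conf" <<'EOF'
[Service]
ExecStartPost=/sbin/iptables -I FORWARD -s 0.0.0.0/0 -j ACCEPT
EOF
}
```

After the reload and restart, systemctl cat docker should list the drop-in after the packaged unit.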

V. Create Pods (Containers)

To create a pod, define a YAML or JSON configuration file on the Kubernetes master, then create the pod with kubectl.

[root@slave1 ~]# mkdir -p /k8s/pods

[root@slave1 ~]# cd /k8s/pods/

[root@slave1 ~]# cat nginx.yaml

nginx.yaml contains the following:

[root@node6717 pods]# cat nginx.yaml

apiVersion: v1
kind: Pod
metadata:
  name: n

kubernetes - taking you into the world of Java microservice architecture
