Environment:

CentOS:

    [root@node252 lib]# uname -a
    Linux node252 3.10.0-957.el7.x86_64 #1 SMP Thu Nov 8 23:39:32 UTC 2018 x86_64 x86_64 x86_64 GNU/Linux
    [root@node252 lib]# cat /etc/redhat-release
    CentOS Linux release 7.6.1810 (Core)
    [root@node252 lib]# rpm -qa | grep mongo
    mongodb-org-mongos-4.0.18-1.el7.x86_64
    mongodb-org-server-4.0.18-1.el7.x86_64
    mongodb-org-shell-4.0.18-1.el7.x86_64
    mongodb-org-tools-4.0.18-1.el7.x86_64
    mongodb-org-4.0.18-1.el7.x86_64

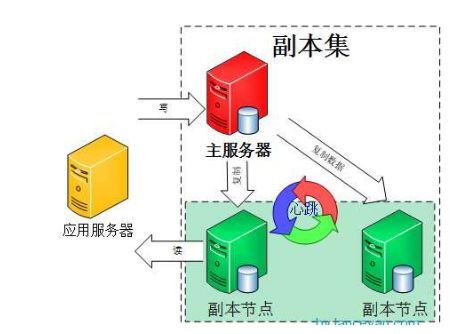

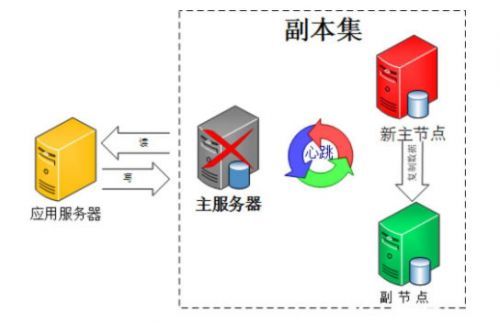

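In a MongoDB replica set with n voting members, electing a primary needs a majority of floor(n/2)+1 votes. Sometimes so many members are lost that the survivors no longer form a majority. The remaining members then cannot elect a primary among themselves, and one of them has to be forced to become primary.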
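The majority arithmetic can be checked with a small JavaScript sketch (JavaScript being the mongo shell's language). The function names `majority` and `canElectPrimary` are illustrative, not mongo shell built-ins. The sketch assumes every member carries exactly one vote.

```javascript
// Illustrative helpers, not mongo shell built-ins.
// Assumption: every replica set member has exactly one vote.

// Votes needed to elect a primary: floor(n / 2) + 1.
function majority(votingMembers) {
  return Math.floor(votingMembers / 2) + 1;
}

// An election can succeed only if the reachable members still hold a majority.
function canElectPrimary(votingMembers, reachableMembers) {
  return reachableMembers >= majority(votingMembers);
}

// The four-member "cms" set from this article:
console.log(majority(4));           // 3
console.log(canElectPrimary(4, 2)); // false: losing 2 of 4 already blocks elections
console.log(canElectPrimary(4, 1)); // false: the case in this article, 3 of 4 lost
```

So in a four-member set, losing two members is already enough to stop elections.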

下面是操作步骤:

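1. Run cfg=rs.conf() to read the current configuration.

2. Keep only the member that should become primary. The 3 in cfg.members[3] is the position in the members array, not the member's _id (in this set the two happen to match):

cfg.members=[cfg.members[3]]

3. Force the new configuration:

rs.reconfig(cfg, {force: true});

4. Check the result with rs.status().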
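Step 2 selects the survivor by array position, which breaks silently if the members array is ordered differently. The sketch below selects by host instead. `keepMembers` is a hypothetical helper, and the config object is a trimmed plain-JavaScript stand-in for the rs.conf() output shown below.

```javascript
// Sketch: keep only the surviving members of a replica set config,
// chosen by host name rather than by array position.
// keepMembers is a hypothetical helper; the config shape mirrors rs.conf().
function keepMembers(cfg, survivingHosts) {
  const kept = cfg.members.filter(function (m) {
    return survivingHosts.indexOf(m.host) !== -1;
  });
  if (kept.length === 0) {
    throw new Error("none of the given hosts is in cfg.members");
  }
  // Return a shallow copy so the original config object is left untouched.
  return Object.assign({}, cfg, { members: kept });
}

// Trimmed stand-in for the rs.conf() output of the "cms" set.
const cfg = {
  _id: "cms",
  version: 32948,
  members: [
    { _id: 0, host: "192.168.1.193:27017", priority: 1, votes: 1 },
    { _id: 1, host: "192.168.1.195:27017", priority: 1, votes: 1 },
    { _id: 2, host: "192.168.1.198:27017", priority: 1, votes: 1 },
    { _id: 3, host: "192.168.1.199:27017", priority: 1, votes: 1 },
  ],
};

const forced = keepMembers(cfg, ["192.168.1.199:27017"]);
console.log(forced.members.map(function (m) { return m._id; })); // [ 3 ]
```

Inside the mongo shell the same filter can be applied in place before the forced reconfig: `cfg = rs.conf(); cfg.members = cfg.members.filter(function (m) { return m.host === "192.168.1.199:27017"; }); rs.reconfig(cfg, {force: true})`.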

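The detailed steps follow.

In this example the replica set has four members, and three of them have been lost. The surviving member drops to SECONDARY. Applications can still read from it, provided they allow reads from secondaries, but they can no longer insert data. The goal is to make this surviving member the primary and run it as a single-member replica set.

Run cfg=rs.conf() to get the member information: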

    cms:SECONDARY> cfg=rs.conf()
    {
        "_id" : "cms",
        "version" : 32948,
        "protocolVersion" : NumberLong(1),
        "members" : [
            {
                "_id" : 0,
                "host" : "192.168.1.193:27017",
                "arbiterOnly" : false,
                "buildIndexes" : true,
                "hidden" : false,
                "priority" : 1,
                "tags" : {
                },
                "slaveDelay" : NumberLong(0),
                "votes" : 1
            },
            {
                "_id" : 1,
                "host" : "192.168.1.195:27017",
                "arbiterOnly" : false,
                "buildIndexes" : true,
                "hidden" : false,
                "priority" : 1,
                "tags" : {
                },
                "slaveDelay" : NumberLong(0),
                "votes" : 1
            },
            {
                "_id" : 2,
                "host" : "192.168.1.198:27017",
                "arbiterOnly" : false,
                "buildIndexes" : true,
                "hidden" : false,
                "priority" : 1,
                "tags" : {
                },
                "slaveDelay" : NumberLong(0),
                "votes" : 1
            },
            {
                "_id" : 3,
                "host" : "192.168.1.199:27017",
                "arbiterOnly" : false,
                "buildIndexes" : true,
                "hidden" : false,
                "priority" : 1,
                "tags" : {
                },
                "slaveDelay" : NumberLong(0),
                "votes" : 1
            }
        ],
        "settings" : {
            "chainingAllowed" : true,
            "heartbeatIntervalMillis" : 2000,
            "heartbeatTimeoutSecs" : 10,
            "electionTimeoutMillis" : 10000,
            "getLastErrorModes" : {
            },
            "getLastErrorDefaults" : {
                "w" : 1,
                "wtimeout" : 0
            },
            "replicaSetId" : ObjectId("59cab0d69b86b9e815cfc02b")
        }
    }

Next, keep only 192.168.1.199 in the member list so that it becomes the primary:

    cms:SECONDARY> cfg.members=[cfg.members[3]]
    [
        {
            "_id" : 3,
            "host" : "192.168.1.199:27017",
            "arbiterOnly" : false,
            "buildIndexes" : true,
            "hidden" : false,
            "priority" : 1,
            "tags" : {
            },
            "slaveDelay" : NumberLong(0),
            "votes" : 1
        }
    ]

Apply the configuration:

    cms:SECONDARY> rs.reconfig(cfg, {force: true})
    { "ok" : 1 }
    cms:PRIMARY>

The prompt has switched to PRIMARY. Applications can now read from and write to this single-member replica set.

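Rebuilding the replica set from the single primary:

Check the status after the change. The operation is complete, and the set now consists of a single member. Secondaries are then added back to rebuild a full replica set.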

    cms:PRIMARY> rs.status()
    {
        "set" : "cms",
        "date" : ISODate("2018-09-04T01:54:12.445Z"),
        "myState" : 1,
        "term" : NumberLong(6),
        "heartbeatIntervalMillis" : NumberLong(2000),
        "members" : [
            {
                "_id" : 3,
                "name" : "192.168.1.199:27017",
                "health" : 1,
                "state" : 1,
                "stateStr" : "PRIMARY",
                "uptime" : 3152420,
                "optime" : {
                    "ts" : Timestamp(1536026052, 24),
                    "t" : NumberLong(6)
                },
                "optimeDate" : ISODate("2018-09-04T01:54:12Z"),
                "infoMessage" : "could not find member to sync from",
                "electionTime" : Timestamp(1536026047, 1),
                "electionDate" : ISODate("2018-09-04T01:54:07Z"),
                "configVersion" : 137682,
                "self" : true
            }
        ],
        "ok" : 1
    }
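The rs.status() document can also be checked programmatically. The sketch below condenses it into a few facts. `summarizeStatus` is a hypothetical helper written as plain JavaScript and runnable in Node.js. It avoids newer array methods, so the function body should also work when pasted into the mongo shell and called as `summarizeStatus(rs.status())`, though that has not been verified here.

```javascript
// Sketch: condense an rs.status() document into a few facts.
// summarizeStatus is a hypothetical helper; the fields it reads
// (myState, members, name, health, stateStr) appear in the output above.
function summarizeStatus(status) {
  const primaries = status.members.filter(function (m) {
    return m.stateStr === "PRIMARY";
  });
  const unhealthy = status.members
    .filter(function (m) { return m.health !== 1; })
    .map(function (m) { return m.name; });
  return {
    // myState 1 means the node you are connected to is PRIMARY and accepts writes.
    writable: status.myState === 1,
    primary: primaries.length > 0 ? primaries[0].name : null,
    memberCount: status.members.length,
    unhealthy: unhealthy,
  };
}

// Trimmed copy of the rs.status() output above.
const status = {
  set: "cms",
  myState: 1,
  members: [
    { _id: 3, name: "192.168.1.199:27017", health: 1, state: 1, stateStr: "PRIMARY", self: true },
  ],
};

console.log(summarizeStatus(status));
```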

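If a node that is to be re-added as a secondary is still running, stop its mongod service first (for example with systemctl stop mongod).

The data files on the new node must be emptied before its mongod process is started again.

Note: if keyfile authentication is configured, do not delete the folder that holds the key file. The relevant part of the mongod configuration file looks like this:

    #security:
    security:
      clusterAuthMode: keyFile
      keyFile: /var/lib/mongo/key/repl_set.key
      authorization: enabled
    #operationProfiling:

Clear the data on the SECONDARY node, keeping only the key folder: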

    [root@node251 ~]# cd /var/lib/mongo/
    [root@node251 mongo]# ls
    collection-0-1410376559665338345.wt diagnostic.data index-7-1410376559665338345.wt
    collection-0-2428134951873617012.wt index-11-1410376559665338345.wt index-9-1410376559665338345.wt
    collection-0--340382256029554464.wt index-1-1410376559665338345.wt journal
    collection-10-1410376559665338345.wt index-1-2428134951873617012.wt key
    collection-16-1410376559665338345.wt index-1--340382256029554464.wt _mdb_catalog.wt
    collection-17-1410376559665338345.wt index-18-1410376559665338345.wt mongod.lock
    collection-19-1410376559665338345.wt index-20-1410376559665338345.wt sizeStorer.wt
    collection-21-1410376559665338345.wt index-22-1410376559665338345.wt storage.bson
    collection-2-1410376559665338345.wt index-2-2428134951873617012.wt WiredTiger
    collection-23-1410376559665338345.wt index-24-1410376559665338345.wt WiredTigerLAS.wt
    collection-4-1410376559665338345.wt index-25-1410376559665338345.wt WiredTiger.lock
    collection-6-1410376559665338345.wt index-3-1410376559665338345.wt WiredTiger.turtle
    collection-8-1410376559665338345.wt index-5-1410376559665338345.wt WiredTiger.wt
    [root@node251 mongo]# rm -rf ./*.wt
    [root@node251 mongo]# ls
    diagnostic.data journal key mongod.lock storage.bson WiredTiger WiredTiger.lock WiredTiger.turtle
    [root@node251 mongo]# rm -rf mongod.lock storage.bson WiredTiger WiredTiger.lock WiredTiger.turtle
    [root@node251 mongo]# rm -rf diagnostic.data journal
    [root@node251 mongo]# ls
    key
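The three `rm -rf` rounds above amount to "delete everything in the dbPath except `key`". The following Node.js sketch does the same in one pass. `clearDataDir` is a hypothetical helper, and it is exercised here on a throwaway temporary directory rather than on /var/lib/mongo. It is destructive, so it assumes mongod on that node is already stopped.

```javascript
// Sketch (Node.js): empty a mongod dbPath but keep the listed entries,
// here the "key" folder holding the keyFile. clearDataDir is hypothetical.
// Destructive: only run it while mongod on that node is stopped.
const fs = require("fs");
const os = require("os");
const path = require("path");

function clearDataDir(dbPath, keep) {
  const removed = [];
  for (const entry of fs.readdirSync(dbPath)) {
    if (keep.includes(entry)) continue;
    // fs.rmSync with recursive/force needs Node.js 14.14 or later.
    fs.rmSync(path.join(dbPath, entry), { recursive: true, force: true });
    removed.push(entry);
  }
  return removed;
}

// Demo on a temporary directory that imitates a small dbPath.
const demo = fs.mkdtempSync(path.join(os.tmpdir(), "mongo-demo-"));
fs.mkdirSync(path.join(demo, "key"));
fs.writeFileSync(path.join(demo, "key", "repl_set.key"), "secret");
fs.mkdirSync(path.join(demo, "journal"));
fs.writeFileSync(path.join(demo, "WiredTiger.wt"), "");
fs.writeFileSync(path.join(demo, "mongod.lock"), "");

clearDataDir(demo, ["key"]);
console.log(fs.readdirSync(demo)); // [ 'key' ]
```

Keeping `key` in place also keeps the key file's ownership and permissions, which mongod checks at startup.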

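Start the local mongod process:

    systemctl start mongod

Then add the new members on the primary. Give each one a lower priority than the primary's. Otherwise a higher-priority member will call an election and take over as primary once it has caught up.

Check the primary's priority: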

    rs0:PRIMARY> rs.config();
    {
        "_id" : "cms",
        "version" : 155928,
        "protocolVersion" : NumberLong(1),
        "writeConcernMajorityJournalDefault" : true,
        "members" : [
            {
                "_id" : 0,
                "host" : "192.168.1.199:27017",
                "arbiterOnly" : false,
                "buildIndexes" : true,
                "hidden" : false,
                "priority" : 10,
                "tags" : {
                },
                "slaveDelay" : NumberLong(0),
                "votes" : 1
            }
        ],
        "settings" : {
            "chainingAllowed" : true,
            "heartbeatIntervalMillis" : 2000,
            "heartbeatTimeoutSecs" : 10,
            "electionTimeoutMillis" : 10000,
            "catchUpTimeoutMillis" : -1,
            "catchUpTakeoverDelayMillis" : 30000,
            "getLastErrorModes" : {
            },
            "getLastErrorDefaults" : {
                "w" : 1,
                "wtimeout" : 0
            },
            "replicaSetId" : ObjectId("5ea6ea6c7b4b6caf94cb5ab3")
        }
    }

Write the new replica set configuration, giving the current primary (192.168.1.199) the highest priority:

    config = { _id:"cms", "protocolVersion" : 1, members:
      [
        {_id:0, host:"192.168.1.193:27017", priority:1},
        {_id:1, host:"192.168.1.194:27017", priority:2},
        {_id:2, host:"192.168.1.198:27017", priority:3},
        {_id:3, host:"192.168.1.199:27017", priority:4}
      ]
    }
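A new configuration like this can be sanity-checked before it is handed to rs.reconfig(). The sketch uses a hypothetical `checkRebuildConfig` and runs three checks. It checks that `_id` values and hosts are unique and that the current primary keeps the strictly highest priority. It also checks that hosts already in the current config keep their existing `_id`, since MongoDB rejects a reconfig that changes a current member's `_id`. Treat these checks as this sketch's assumptions, not an exhaustive list of server-side rules. Unset priorities are assumed to default to 1.

```javascript
// Sketch: sanity-check a rebuild config before rs.reconfig().
// checkRebuildConfig is hypothetical; it checks only three things:
//  1. member _id values and hosts are unique;
//  2. the current primary keeps the strictly highest priority;
//  3. hosts already in the current config keep their existing _id.
function checkRebuildConfig(newCfg, currentCfg, primaryHost) {
  const problems = [];
  const ids = newCfg.members.map(function (m) { return m._id; });
  const hosts = newCfg.members.map(function (m) { return m.host; });
  if (new Set(ids).size !== ids.length) problems.push("duplicate _id");
  if (new Set(hosts).size !== hosts.length) problems.push("duplicate host");

  // Assumption: an unset priority counts as 1.
  const prio = function (m) { return m.priority === undefined ? 1 : m.priority; };
  const primary = newCfg.members.filter(function (m) { return m.host === primaryHost; })[0];
  if (!primary) {
    problems.push("primary " + primaryHost + " missing from new config");
  } else {
    newCfg.members.forEach(function (m) {
      if (m.host !== primaryHost && prio(m) >= prio(primary)) {
        problems.push(m.host + " priority not lower than primary");
      }
    });
  }

  currentCfg.members.forEach(function (old) {
    const now = newCfg.members.filter(function (m) { return m.host === old.host; })[0];
    if (now && now._id !== old._id) {
      problems.push(old.host + " _id changes from " + old._id + " to " + now._id);
    }
  });
  return problems;
}

// The new "cms" config above, checked against the single-member
// config left by the forced reconfig (199 kept _id 3).
const newCfg = { _id: "cms", protocolVersion: 1, members: [
  { _id: 0, host: "192.168.1.193:27017", priority: 1 },
  { _id: 1, host: "192.168.1.194:27017", priority: 2 },
  { _id: 2, host: "192.168.1.198:27017", priority: 3 },
  { _id: 3, host: "192.168.1.199:27017", priority: 4 },
] };
const afterForce = { _id: "cms", members: [{ _id: 3, host: "192.168.1.199:27017", priority: 1 }] };

console.log(checkRebuildConfig(newCfg, afterForce, "192.168.1.199:27017")); // []
```

Against the rs.config() output shown earlier, where 192.168.1.199 has `_id` 0, the same check reports that its `_id` would change from 0 to 3.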

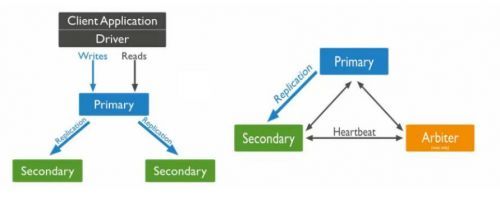

====== One primary, one secondary, one arbiter ======

config = { _id : "rs0", "protocolVersion" : 1,members : [ {_id : 0, host : "172.16.1.250:27017","priority":3 }, {_id : 1, host : "172.16.1.251:27017","priority":1}, {_id : 2, host : "172.16.1.252:27017","arbiterOnly":true} ] };

// Reconfigure the replica set

rs.reconfig(config);
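This layout has three voting members: two data-bearing members plus the arbiter. A majority is therefore 2 votes, so it can lose any one member and still elect a primary. The four-member cms set, by contrast, stalls after losing two. The sketch below checks this for a given config. `survivesLoss` is a hypothetical helper. It assumes `votes` and `priority` default to 1 when absent, and that arbiters vote but can never become primary.

```javascript
// Sketch: can a config still elect a primary after losing the given hosts?
// survivesLoss is hypothetical. Assumptions: "votes" and "priority" default
// to 1 when absent; arbiters vote but can never become primary.
function survivesLoss(cfg, lostHosts) {
  const lost = function (m) { return lostHosts.indexOf(m.host) !== -1; };
  const voters = cfg.members.filter(function (m) {
    return (m.votes === undefined ? 1 : m.votes) > 0;
  });
  const needed = Math.floor(voters.length / 2) + 1;
  const remainingVotes = voters.filter(function (m) { return !lost(m); }).length;
  // A primary must be a surviving, data-bearing member with priority > 0.
  const electable = cfg.members.filter(function (m) {
    const priority = m.priority === undefined ? 1 : m.priority;
    return !lost(m) && !m.arbiterOnly && priority > 0;
  }).length;
  return remainingVotes >= needed && electable > 0;
}

// The one-primary, one-secondary, one-arbiter config above.
const rs0 = { _id: "rs0", protocolVersion: 1, members: [
  { _id: 0, host: "172.16.1.250:27017", priority: 3 },
  { _id: 1, host: "172.16.1.251:27017", priority: 1 },
  { _id: 2, host: "172.16.1.252:27017", arbiterOnly: true },
] };

rs0.members.forEach(function (m) {
  console.log(m.host, survivesLoss(rs0, [m.host])); // true for each single host
});
console.log(survivesLoss(rs0, ["172.16.1.250:27017", "172.16.1.252:27017"])); // false
```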

==============

rs.reconfig(config);

Once the configuration is applied, each SECONDARY automatically syncs the data from the primary and then builds the indexes.
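Progress of the new secondaries can be followed through the `optimeDate` fields of rs.status(). In the 4.0 shell, rs.printSlaveReplicationInfo() prints a similar summary. The sketch below computes per-secondary lag in seconds. `replicationLagSeconds` is a hypothetical helper, and the sample timestamps are invented for illustration, not taken from this cluster. Members still in initial sync (STARTUP2) are skipped because their optime is not yet meaningful.

```javascript
// Sketch: per-secondary lag in seconds from an rs.status() document.
// replicationLagSeconds is hypothetical; it reads name, stateStr and
// optimeDate (Date objects, as ISODate values are in the mongo shell).
function replicationLagSeconds(status) {
  const primary = status.members.filter(function (m) {
    return m.stateStr === "PRIMARY";
  })[0];
  if (!primary) return null; // no visible primary, so lag is undefined
  const lag = {};
  status.members.forEach(function (m) {
    // Only SECONDARY members are reported; STARTUP2 (initial sync) is skipped.
    if (m.stateStr === "SECONDARY") {
      lag[m.name] = (primary.optimeDate.getTime() - m.optimeDate.getTime()) / 1000;
    }
  });
  return lag;
}

// Hypothetical sample values, for illustration only.
const sample = { members: [
  { name: "192.168.1.199:27017", stateStr: "PRIMARY", optimeDate: new Date("2018-09-04T01:54:12Z") },
  { name: "192.168.1.198:27017", stateStr: "SECONDARY", optimeDate: new Date("2018-09-04T01:54:02Z") },
  { name: "192.168.1.193:27017", stateStr: "STARTUP2", optimeDate: new Date("1970-01-01T00:00:00Z") },
] };

console.log(replicationLagSeconds(sample)); // { '192.168.1.198:27017': 10 }
```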

Case study: forcing one member of a MongoDB replica set to become primary and rebuilding the replica set
