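The latest Kubernetes release had reached 1.20.x, so I used the holidays to build a cluster on what was then the newest version, v1.20.2. By the time I got around to writing this up, the latest official release had already moved on to v1.21.2, which is the version used in the environment below.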

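1. Overview

1.1 Ways to deploy Prometheus monitoring in k8s

There are three common ways to deploy Prometheus monitoring in k8s:

· Deploying manually from YAML manifests (the approach used in this article)

· Deploying with an Operator

· Deploying with a Helm chart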

1.2 什么是Prometheus Operator

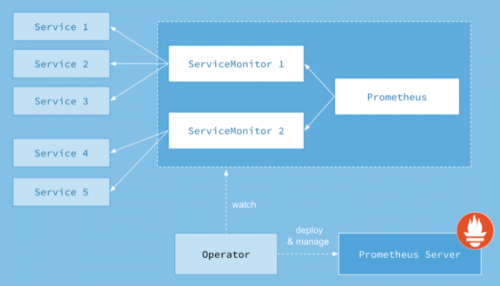

Prometheus Operator的本职就是一组用户自定义的CRD资源以及Controller的实现,Prometheus Operator负责监听这些自定义资源的变化,并且根据这些资源的定义自动化的完成如Prometheus Server自身以及配置的自动化管理工作。以下是Prometheus Operator的架构图
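To make the CRD idea concrete: instead of editing prometheus.yml, you describe a scrape target as a ServiceMonitor object and the Operator regenerates the Prometheus configuration from it. The function below is a minimal sketch that prints such a manifest. The application name `my-app`, its `app` label, the `default` namespace and the Service port name `metrics` are hypothetical placeholders, not something kube-prometheus ships.

```bash
#!/usr/bin/env bash
# Print a ServiceMonitor manifest for a hypothetical application.
#   $1: application name (also used as the value of the Service's "app" label)
#   $2: namespace in which the target Service lives
#   $3: name of the Service port that exposes /metrics (default: metrics)
service_monitor() {
  local app=$1 ns=$2 port=${3:-metrics}
  cat <<EOF
apiVersion: monitoring.coreos.com/v1
kind: ServiceMonitor
metadata:
  name: ${app}
  namespace: monitoring
spec:
  namespaceSelector:
    matchNames:
    - ${ns}
  selector:
    matchLabels:
      app: ${app}
  endpoints:
  - port: ${port}
    interval: 30s
EOF
}
```

Usage would be along the lines of `service_monitor my-app default | kubectl apply -f -`. kube-prometheus 0.8 sets `serviceMonitorSelector: {}` and `serviceMonitorNamespaceSelector: {}` in prometheus-prometheus.yaml, so Prometheus selects every ServiceMonitor; it can still only scrape namespaces where its `prometheus-k8s` service account has RBAC access, which by default covers only a few namespaces (see prometheus-roleSpecificNamespaces.yaml).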

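1.3 Why use the Prometheus Operator

Prometheus itself provides no API for managing its configuration (in particular, scrape targets and alerting rules), nor a convenient way to manage multiple instances, so this part usually ends up requiring hand-written code or scripts. To reduce the complexity of managing applications like this, CoreOS introduced the concept of the Operator, first releasing the Etcd Operator for running and managing etcd on Kubernetes, and later the Prometheus Operator.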

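1.4 About the kube-prometheus project

prometheus-operator repository: https://github.com/prometheus-operator/prometheus-operator

kube-prometheus repository: https://github.com/prometheus-operator/kube-prometheus

How the two projects relate: the former contains only the Prometheus Operator, while the latter contains the Operator plus deployments of the related Prometheus components and a set of commonly used Prometheus monitoring configurations. Specifically, it includes the following components:

· The Prometheus Operator: creates the CRD-based custom resource objects

· Highly available Prometheus: a highly available Prometheus deployment

· Highly available Alertmanager: a highly available alerting component

· Prometheus node-exporter: host-level metrics collection

· Prometheus Adapter for Kubernetes Metrics APIs: exposes custom metrics to Kubernetes (for example, to autoscale an application based on nginx request rates)

· kube-state-metrics: state metrics for Kubernetes resource objects

· Grafana: dashboards and visualization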

2. Environment

The k8s cluster used in this article runs v1.21.2, was built with kubeadm, and consists of 1 master + 1 node.

Persistent storage is provided by NFS.

```
[root@k8smaster ~]# kubectl version -o yaml
clientVersion:
  buildDate: "2021-06-16T12:59:11Z"
  compiler: gc
  gitCommit: 092fbfbf53427de67cac1e9fa54aaa09a28371d7
  gitTreeState: clean
  gitVersion: v1.21.2
  goVersion: go1.16.5
  major: "1"
  minor: "21"
  platform: linux/amd64
serverVersion:
  buildDate: "2021-06-16T12:53:14Z"
  compiler: gc
  gitCommit: 092fbfbf53427de67cac1e9fa54aaa09a28371d7
  gitTreeState: clean
  gitVersion: v1.21.2
  goVersion: go1.16.5
  major: "1"
  minor: "21"
  platform: linux/amd64
[root@k8smaster ~]# kubectl get nodes
NAME      STATUS   ROLES                  AGE   VERSION
k8snode   Ready    <none>                 9d    v1.21.2
node      Ready    control-plane,master   9d    v1.21.2
```

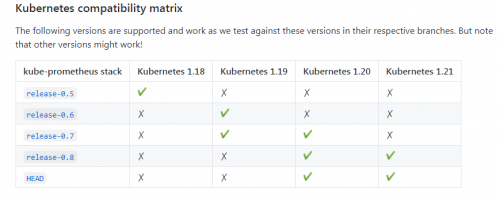

kube-prometheus compatibility matrix: https://github.com/prometheus-operator/kube-prometheus#kubernetes-compatibility-matrix. According to the matrix, the latest release, release-0.8, is the one to deploy on this cluster.

3. Preparing the manifests

Check out the latest release-0.8 branch from the official repository, or download the v0.8.0 release archive directly:

```
➜ git clone https://github.com/prometheus-operator/kube-prometheus.git
➜ cd kube-prometheus
➜ git checkout release-0.8
```

or

```
➜ wget -c -O kube-prometheus-0.8.0.tar.gz https://github.com/prometheus-operator/kube-prometheus/archive/v0.8.0.tar.gz
[root@k8smaster monitor]# tar xf kube-prometheus-0.8.0.tar.gz
[root@k8smaster monitor]# ls
grafana  ingress.yaml  kube-prometheus-0.8.0  kube-prometheus-0.8.0.tar.gz  prometheus
[root@k8smaster monitor]# cd kube-prometheus-0.8.0/manifests/
[root@k8smaster manifests]# ls
alertmanager-alertmanager.yaml  node-exporter-clusterRoleBinding.yaml
alertmanager-podDisruptionBudget.yaml  node-exporter-clusterRole.yaml
alertmanager-prometheusRule.yaml  node-exporter-daemonset.yaml
alertmanager-secret.yaml  node-exporter-prometheusRule.yaml
alertmanager-serviceAccount.yaml  node-exporter-serviceAccount.yaml
alertmanager-serviceMonitor.yaml  node-exporter-serviceMonitor.yaml
alertmanager-service.yaml  node-exporter-service.yaml
blackbox-exporter-clusterRoleBinding.yaml  prometheus-adapter-apiService.yaml
blackbox-exporter-clusterRole.yaml  prometheus-adapter-clusterRoleAggregatedMetricsReader.yaml
blackbox-exporter-configuration.yaml  prometheus-adapter-clusterRoleBindingDelegator.yaml
blackbox-exporter-deployment.yaml  prometheus-adapter-clusterRoleBinding.yaml
blackbox-exporter-serviceAccount.yaml  prometheus-adapter-clusterRoleServerResources.yaml
blackbox-exporter-serviceMonitor.yaml  prometheus-adapter-clusterRole.yaml
blackbox-exporter-service.yaml  prometheus-adapter-configMap.yaml
grafana-dashboardDatasources.yaml  prometheus-adapter-deployment.yaml
grafana-dashboardDefinitions.yaml  prometheus-adapter-roleBindingAuthReader.yaml
grafana-dashboardSources.yaml  prometheus-adapter-serviceAccount.yaml
grafana-deployment.yaml  prometheus-adapter-serviceMonitor.yaml
grafana-serviceAccount.yaml  prometheus-adapter-service.yaml
grafana-serviceMonitor.yaml  prometheus-clusterRoleBinding.yaml
grafana-service.yaml  prometheus-clusterRole.yaml
kube-prometheus-prometheusRule.yaml  prometheus-operator-prometheusRule.yaml
kubernetes-prometheusRule.yaml  prometheus-operator-serviceMonitor.yaml
kubernetes-serviceMonitorApiserver.yaml  prometheus-podDisruptionBudget.yaml
kubernetes-serviceMonitorCoreDNS.yaml  prometheus-prometheusRule.yaml
kubernetes-serviceMonitorKubeControllerManager.yaml  prometheus-prometheus.yaml
kubernetes-serviceMonitorKubelet.yaml  prometheus-roleBindingConfig.yaml
kubernetes-serviceMonitorKubeScheduler.yaml  prometheus-roleBindingSpecificNamespaces.yaml
kube-state-metrics-clusterRoleBinding.yaml  prometheus-roleConfig.yaml
kube-state-metrics-clusterRole.yaml  prometheus-roleSpecificNamespaces.yaml
kube-state-metrics-deployment.yaml  prometheus-serviceAccount.yaml
kube-state-metrics-prometheusRule.yaml  prometheus-serviceMonitor.yaml
kube-state-metrics-serviceAccount.yaml  prometheus-service.yaml
kube-state-metrics-serviceMonitor.yaml  setup
kube-state-metrics-service.yaml
```

Edit the manifests to add persistent storage for Prometheus and Grafana.

In manifests/prometheus-prometheus.yaml, add `retention` and `storage` at the end of `spec` (the first four lines below are the existing end of the file):

```yaml
  serviceAccountName: prometheus-k8s
  serviceMonitorNamespaceSelector: {}
  serviceMonitorSelector: {}
  version: 2.26.0
  retention: 3d
  storage:
    volumeClaimTemplate:
      spec:
        storageClassName: prometheus-nfs
        resources:
          requests:
            storage: 10Gi
```

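In manifests/grafana-deployment.yaml, replace the `grafana-storage` emptyDir with the PVC. The Grafana container mounts /var/lib/grafana through a volumeMount named `grafana-storage`, so either keep that volume name (as below) or rename the volumeMount to match; otherwise the Deployment is rejected because its mount refers to a volume that no longer exists.

```yaml
      securityContext:
        fsGroup: 65534
        runAsNonRoot: true
        runAsUser: 65534
      serviceAccountName: grafana
      volumes:
      # - emptyDir: {}
      #   name: grafana-storage
      - name: grafana-storage
        persistentVolumeClaim:
          claimName: grafana-pvc
      - name: grafana-datasources
        secret:
          secretName: grafana-datasources
      - configMap:
```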

Create NFS-backed PVs and PVCs for Grafana and Prometheus. This assumes the NFS server is already set up.

Prometheus shared storage:

```
[root@k8smaster prometheus]# cat prometheus_pv.yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: prometheus-pv
spec:
  capacity:
    storage: 10Gi
  accessModes:
  - ReadWriteMany
  persistentVolumeReclaimPolicy: Retain
  storageClassName: prometheus-nfs
  nfs:
    path: /nfsdata/prometheus
    server: 192.168.130.103
[root@k8smaster prometheus]# cat prometheus_pvc.yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: prometheus-pvc
spec:
  accessModes:
  - ReadWriteMany
  resources:
    requests:
      storage: 10Gi
  storageClassName: prometheus-nfs
```

Grafana shared storage:

```
[root@k8smaster grafana]# cat grafana_pv.yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: grafana-pv
spec:
  capacity:
    storage: 10Gi
  accessModes:
  - ReadWriteMany
  persistentVolumeReclaimPolicy: Retain
  storageClassName: grafana-nfs
  nfs:
    path: /nfsdata/grafana
    server: 192.168.130.103
[root@k8smaster grafana]# cat grafana_pvc.yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: grafana-pvc
spec:
  accessModes:
  - ReadWriteMany
  resources:
    requests:
      storage: 10Gi
  storageClassName: grafana-nfs
```
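One caveat worth checking on the Prometheus side: `storage.volumeClaimTemplate` makes the StatefulSet create one PVC per replica (two here, for prometheus-k8s-0 and prometheus-k8s-1), and a static PV can be bound by only one claim. Unless a dynamic provisioner serves the `prometheus-nfs` class, each replica therefore needs its own PV; the standalone `prometheus-pvc` is not referenced by the Prometheus pods and would itself consume the single `prometheus-pv`. The sketch below prints one NFS PV per replica. The function names, the PV names `prometheus-pv-0`/`prometheus-pv-1` and the per-replica sub-directories `/nfsdata/prometheus/0` and `/nfsdata/prometheus/1` are assumptions; those directories must exist on the NFS server.

```bash
#!/usr/bin/env bash
# Print a static NFS PersistentVolume manifest, preceded by a YAML document separator.
#   $1: PV name   $2: NFS export path   $3: NFS server
#   $4: storageClassName   $5: capacity (default 10Gi)
nfs_pv() {
  local name=$1 path=$2 server=$3 class=$4 size=${5:-10Gi}
  # Both access modes are listed so the PV can satisfy a claim requesting either one;
  # the volumeClaimTemplate above does not set accessModes explicitly.
  cat <<EOF
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: ${name}
spec:
  capacity:
    storage: ${size}
  accessModes:
  - ReadWriteOnce
  - ReadWriteMany
  persistentVolumeReclaimPolicy: Retain
  storageClassName: ${class}
  nfs:
    path: ${path}
    server: ${server}
EOF
}

# Print one PV per Prometheus replica (default 2, matching prometheus-k8s-0/1).
#   $1: NFS server   $2: replica count
prometheus_pvs() {
  local server=$1 replicas=${2:-2} i
  for ((i = 0; i < replicas; i++)); do
    nfs_pv "prometheus-pv-${i}" "/nfsdata/prometheus/${i}" "$server" prometheus-nfs
  done
}
```

For example, `prometheus_pvs 192.168.130.103 | kubectl create -f -` would create both PVs in one go.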

4. Deployment

Create the PVs and PVCs:

```
[root@k8smaster grafana]# kubectl create -f grafana_pv.yaml
[root@k8smaster grafana]# kubectl create -f grafana_pvc.yaml
[root@k8smaster prometheus]# kubectl create -f prometheus_pv.yaml
[root@k8smaster prometheus]# kubectl create -f prometheus_pvc.yaml
```
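It is worth confirming that the claims actually bind. Note that `grafana_pvc.yaml` sets no `metadata.namespace`, so the claim is created in the current kubectl context's namespace, while the Grafana Deployment runs in `monitoring` and can only use a PVC from that namespace. A minimal sketch of the check; `unbound_claims` and `check_claims` are hypothetical helper names, and the filter reads `namespace/name phase` pairs so it can be tested without a cluster.

```bash
#!/usr/bin/env bash
# Read "namespace/name phase" pairs on stdin and print every claim that is not Bound.
unbound_claims() {
  awk '$2 != "Bound" { print $1 " (" $2 ")" }'
}

# List all PVCs in the cluster and report the ones that are still Pending or Lost.
check_claims() {
  kubectl get pvc -A \
    -o jsonpath='{range .items[*]}{.metadata.namespace}/{.metadata.name} {.status.phase}{"\n"}{end}' \
    | unbound_claims
}
```

Running `check_claims` should print nothing once every claim is Bound; `kubectl get pvc -n monitoring` shows whether `grafana-pvc` ended up in the right namespace.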

Some of the images cannot be pulled from within mainland China, so they have to be pulled on a machine outside the country and packaged up. The image archives:

```
[root@k8smaster images]# ls
directxman12_k8s-prometheus-adapter:v0.8.4.tar.gz
grafana_grafana_7.5.4.tar.gz
jimmidyson_configmap-reload:v0.5.0.tar.gz
k8s.gcr.io_kube-state-metrics_kube-state-metrics_v2.0.0.tar.gz
kube-prometheus-0.8.0.tar.gz
quay.io_brancz_kube-rbac-proxy_v0.8.0.tar.gz
quay.io_brancz_kube-rbac-proxy:v0.8.0.tar.gz
quay.io_prometheus_alertmanager:v0.21.0.tar.gz
quay.io_prometheus_blackbox-exporter:v0.18.0.tar.gz
quay.io_prometheus_node-exporter_v1.1.2.tar.gz
quay.io_prometheus-operator_prometheus-operator_v0.47.0.tar.gz
quay.io_prometheus_prometheus_v2.26.0.tar.gz
```

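Export and compress each image with commands like the following, then import the archive on the cluster nodes (`docker load` accepts the gzipped archive directly):

```
docker save -o quay.io_prometheus_prometheus_v2.26.0.tar quay.io/prometheus/prometheus:v2.26.0
gzip quay.io_prometheus_prometheus_v2.26.0.tar
docker load -i quay.io_prometheus_prometheus_v2.26.0.tar.gz
```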
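Repeating these commands for every image is tedious, so here is a minimal sketch that loops over the whole list. The image references are reconstructed from the archive names listed above (verify them against the `image:` fields in manifests/ before relying on them), and this sketch names each archive by replacing every `/` and `:` with `_`, which differs slightly from some of the names above. `IMAGES`, `tarball_name`, `save_images` and `load_images` are hypothetical names.

```bash
#!/usr/bin/env bash
# Image references reconstructed from the archive names above (verify before use).
IMAGES=(
  directxman12/k8s-prometheus-adapter:v0.8.4
  grafana/grafana:7.5.4
  jimmidyson/configmap-reload:v0.5.0
  k8s.gcr.io/kube-state-metrics/kube-state-metrics:v2.0.0
  quay.io/brancz/kube-rbac-proxy:v0.8.0
  quay.io/prometheus/alertmanager:v0.21.0
  quay.io/prometheus/blackbox-exporter:v0.18.0
  quay.io/prometheus/node-exporter:v1.1.2
  quay.io/prometheus-operator/prometheus-operator:v0.47.0
  quay.io/prometheus/prometheus:v2.26.0
)

# Map an image reference to an archive name: every "/" and ":" becomes "_".
tarball_name() {
  local ref=${1//\//_}
  printf '%s.tar\n' "${ref//:/_}"
}

# On a host with registry access: pull, save and gzip every image.
save_images() {
  local img tar
  for img in "${IMAGES[@]}"; do
    tar=$(tarball_name "$img")
    docker pull "$img" && docker save -o "$tar" "$img" && gzip -f "$tar" || return 1
  done
}

# On each cluster node: import the archives produced by save_images.
# Only the listed images are loaded, so kube-prometheus-0.8.0.tar.gz is skipped.
load_images() {
  local img
  for img in "${IMAGES[@]}"; do
    docker load -i "$(tarball_name "$img").gz" || return 1
  done
}
```

Since node-exporter runs as a DaemonSet and the other pods may land on either node, the archives need to be loaded on every node, not just the master.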

Install from the YAML manifests. manifests/setup (the monitoring namespace, the CRDs and the Operator) must be created before the rest:

```
[root@k8smaster monitor]# cd kube-prometheus-0.8.0/
[root@k8smaster kube-prometheus-0.8.0]# ls
build.sh examples jsonnet Makefile sync-to-internal-registry.jsonnet
code-of-conduct.md experimental jsonnetfile.json manifests tests
DCO go.mod jsonnetfile.lock.json NOTICE test.sh
docs go.sum kustomization.yaml README.md
example.jsonnet hack LICENSE scripts
[root@k8smaster kube-prometheus-0.8.0]# kubectl create -f manifests/setup
[root@k8smaster kube-prometheus-0.8.0]# kubectl create -f manifests/
```
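Running the two `kubectl create` commands back to back can fail on the ServiceMonitor and PrometheusRule objects if their CRDs are not served yet. The kube-prometheus README suggests polling `kubectl get servicemonitors --all-namespaces` between the two steps; the sketch below wraps that in a small retry helper. `retry` and `install_kube_prometheus` are hypothetical names, and the budget of 60 attempts at 2-second intervals is an arbitrary choice.

```bash
#!/usr/bin/env bash
# Run a command until it succeeds, at most $1 times, sleeping $2 seconds between attempts.
retry() {
  local attempts=$1 delay=$2 i
  shift 2
  for ((i = 1; i <= attempts; i++)); do
    "$@" && return 0
    (( i < attempts )) && sleep "$delay"
  done
  return 1
}

# Run from the kube-prometheus-0.8.0 directory.
install_kube_prometheus() {
  kubectl create -f manifests/setup || return 1
  # Wait until the ServiceMonitor CRD is served before creating objects that use it.
  retry 60 2 kubectl get servicemonitors --all-namespaces >/dev/null 2>&1 || return 1
  kubectl create -f manifests/
}
```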

Check the status:

```
[root@k8smaster ~]# kubectl get pod -n monitoring
NAME                                   READY   STATUS    RESTARTS   AGE
alertmanager-main-0                    2/2     Running   0          175m
alertmanager-main-1                    2/2     Running   0          175m
alertmanager-main-2                    2/2     Running   0          175m
blackbox-exporter-55c457d5fb-dwmrk     3/3     Running   0          175m
grafana-6dd5b5f65-w75kp                1/1     Running   0          175m
kube-state-metrics-76f6cb7996-znv85    3/3     Running   0          165m
node-exporter-772sf                    2/2     Running   0          175m
node-exporter-r8vkh                    2/2     Running   0          175m
prometheus-adapter-59df95d9f5-k49cr    1/1     Running   0          175m
prometheus-adapter-59df95d9f5-pz6h4    1/1     Running   0          175m
prometheus-k8s-0                       2/2     Running   1          175m
prometheus-k8s-1                       2/2     Running   1          175m
prometheus-operator-7775c66ccf-92qxf   2/2     Running   0          176m
[root@k8smaster ~]# kubectl get svc -n monitoring
NAME                    TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)                      AGE
alertmanager-main       ClusterIP   10.96.132.86    <none>        9093/TCP                     175m
alertmanager-operated   ClusterIP   None            <none>        9093/TCP,9094/TCP,9094/UDP   175m
blackbox-exporter       ClusterIP   10.103.8.64     <none>        9115/TCP,19115/TCP           175m
grafana                 ClusterIP   10.97.116.67    <none>        3000/TCP                     175m
kube-state-metrics      ClusterIP   None            <none>        8443/TCP,9443/TCP            175m
node-exporter           ClusterIP   None            <none>        9100/TCP                     175m
prometheus-adapter      ClusterIP   10.106.82.222   <none>        443/TCP                      175m
prometheus-k8s          ClusterIP   10.110.18.245   <none>        9090/TCP                     175m
prometheus-operated     ClusterIP   None            <none>        9090/TCP                     175m
prometheus-operator     ClusterIP   None            <none>        8443/TCP                     176m
```
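Rather than eyeballing the READY column, the check can be scripted. A minimal sketch, assuming the default `kubectl get pod --no-headers` column order (NAME READY STATUS RESTARTS AGE); `not_ready` and `wait_monitoring_ready` are hypothetical helpers, and the 60 polls at 5-second intervals are an arbitrary timeout.

```bash
#!/usr/bin/env bash
# Read "kubectl get pod --no-headers" output on stdin and print the names of pods
# that are not Running or whose READY column is not "n/n".
not_ready() {
  awk '{ split($2, r, "/"); if ($3 != "Running" || r[1] != r[2]) print $1 }'
}

# Poll the monitoring namespace until every pod is ready (about 5 minutes at most).
wait_monitoring_ready() {
  local i out pending
  for ((i = 0; i < 60; i++)); do
    out=$(kubectl get pod -n monitoring --no-headers) || return 1
    pending=$(not_ready <<<"$out")
    [ -n "$out" ] && [ -z "$pending" ] && return 0
    sleep 5
  done
  printf 'still not ready:\n%s\n' "$pending" >&2
  return 1
}
```

Pods in `Completed` state (from Jobs) would also be reported by this filter; the kube-prometheus 0.8 manifests do not create any, but other workloads in the namespace might.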

Create an Ingress for Prometheus, Grafana and Alertmanager. The manifest is saved as ingress.yaml in the monitor directory:

```
[root@k8smaster monitor]# cat ingress.yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: prom-ingress
  namespace: monitoring
  annotations:
    kubernetes.io/ingress.class: "nginx"
    prometheus.io/http_probe: "true"
spec:
  rules:
  - host: alert.k8s-1.21.2.com
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: alertmanager-main
            port:
              number: 9093
  - host: grafana.k8s-1.21.2.com
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: grafana
            port:
              number: 3000
  - host: prom.k8s-1.21.2.com
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: prometheus-k8s
            port:
              number: 9090
[root@k8smaster monitor]# kubectl create -f ingress.yaml
```
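Unless the three host names are registered in DNS, clients have to map them to the ingress controller themselves. A minimal sketch, assuming the nginx ingress controller is reachable over plain HTTP on port 80 at a single IP that you supply (the address is yours, not from this article). It prints /etc/hosts entries and probes each service through the Ingress; `/-/healthy` is the health endpoint of Prometheus and Alertmanager, and `/api/health` is Grafana's. `ENDPOINTS`, `hosts_entries` and `probe_all` are hypothetical names.

```bash
#!/usr/bin/env bash
# "host health-path" pairs; the host names come from ingress.yaml.
ENDPOINTS=(
  "alert.k8s-1.21.2.com /-/healthy"
  "grafana.k8s-1.21.2.com /api/health"
  "prom.k8s-1.21.2.com /-/healthy"
)

# Print one /etc/hosts line per host, pointing at the ingress IP ($1).
hosts_entries() {
  local ip=$1 entry
  for entry in "${ENDPOINTS[@]}"; do
    printf '%s %s\n' "$ip" "${entry%% *}"
  done
}

# Probe every host through the ingress IP ($1) by setting the Host header,
# so no /etc/hosts change is needed for the check itself.
probe_all() {
  local ip=$1 entry host path
  for entry in "${ENDPOINTS[@]}"; do
    host=${entry%% *} path=${entry#* }
    if curl -fsS -o /dev/null -H "Host: ${host}" "http://${ip}${path}"; then
      echo "OK   ${host}"
    else
      echo "FAIL ${host}"
    fi
  done
}
```

On a client machine, `hosts_entries <ingress-ip> | sudo tee -a /etc/hosts` appends the mappings, and `probe_all <ingress-ip>` reports which backends answer.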

At this point, deploying k8s monitoring with kube-prometheus is essentially complete.

Deploying monitoring for k8s 1.21.2 with kube-prometheus (latest version)


Reader comment: Didn't 1.21 remove selfLink support? How did you get NFS working? Could you explain?
